S2E4: 20,000 Apps Under the Sea: Deep Dive into Vibe Coding Security

Vibe coding is allowing even non-developers to produce fully functional web applications by using LLMs to generate code – but how secure are they? In this episode of AppSec Serialized, special guest Bogdan Calin joins hosts Dan Murphy and Ryan Bergquist to talk about his research, which involved vibe-coding over 20,000 applications and analyzing them to learn what vulnerabilities and hardcoded secrets are most frequent.

Read the research article discussed in the episode: Security issues in vibe-coded web applications: 20,000 apps built and analyzed

Transcript

Dan Murphy: Hello and welcome to another episode of AppSec Serialized, the podcast about web security and those who practice it. I’m your host Dan Murphy and with me as always is my cohost Ryan. Ryan, say hello.

Ryan Bergquist: Good to be back, hey everyone.

Dan: Very, very good. And today we actually have a very special guest. We have Bogdan Calin, who is Principal Security Researcher here at Invicti Security. And we wanted to follow up on a bit of research that Bogdan recently published with his work on LLM security. And specifically, there’s a great blog that kind of goes into more technical detail than what we’ll be covering here today. I highly recommend it as a great read. But Bogdan, we’re gonna ask you a couple questions about that. But first off, hey, welcome to the show and we really appreciate having you on here.

Bogdan Calin: Thank you very much. Hello.

Dan: Excellent. So Bogdan, when you did your work – you effectively wanted to find patterns in LLM scanning and you generated over 20,000 LLM applications using a host of different technologies. It was a lot of work. So my first question is, how’d you come up with this? Like what inspired you to do the research and how long did it take to create 20,000 apps?

Bogdan: So basically the idea is that a lot of people now use LLMs to generate applications, and sometimes now they generate complete applications because it’s very easy and the LLMs now are good enough to generate really functional applications. So I was just curious to see what the code generated by these LLMs looks like, and I wanted to generate as many as possible to have enough applications for some acceptable statistics, because if you have too few of them, it’s not enough and you can’t be sure that what you see doesn’t apply just to those few applications.

It took me like basically… the generation didn’t take long. It was in a weekend basically it was I think two days to generate applications with OpenRouter. But then the analyzing of the application that stuff took a long time because it’s a lot of data and it’s very hard figuring out how to look on all of them in an automated way. And on a few of them, I had to look manually because some of the stuff you cannot just automate. You have to manually look on them.

Dan: Mm-hmm, and 20,000 apps is a lot. So that’s running a lot of LLMs with the faucet on wide open. A lot of tokens going through to create that, which is really, really cool. So you generated them with a variety of different technologies. In the article, you’ve got a graph that shows a pretty good distribution of a little bit of this, a little bit of that, because you were interested in finding the patterns that were common throughout all those different things.

So what was the work that went into getting a good distribution of technology? Did you have to modify prompts? Was it just a case of sliding that temperature slider up really, really high and letting the LLM take the wheel? Like looking at that chart, it was really interesting to see that there was a really good cross-section that really reflects the diversity with which our apps are built today. So how did you achieve that?

Bogdan: Yes, so it’s not easy to generate different applications because when you ask an LLM for an application, it usually falls back on a few common technologies and keeps using those. So you have to vary the prompt a lot. So basically, I started by generating a list of technologies which are popular, and I generated them from many LLMs together, like from GPT-5, from Claude, from Gemini. And I combined all of them together and generated a few requirements, like what building blocks the application needs to have: for example, you need to have RBAC or you need to have JWT, or what kind of database you need. And I made a list of that stuff, then I made a list of requirements. For example, this one should only be static. This one should have Docker.

I made a lot of lists of requirements for these applications. And then I used a lot of LLMs. I mean, and then I made some other stuff like the theme of the application. The application should be, let’s say, about like, I don’t know, money management or something like that. All of this stuff is also generated by the LLMs. I generated a list of themes, applications, technologies, requirements, all of this stuff. And then you generate a lot of different prompts from all of them, combining all of them together. And you also vary the temperature because the temperature is important to get the LLM to generate something different, not like the common application which it normally generates. And then you just run it with OpenRouter.
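The prompt-building step described here can be pictured with a minimal Python sketch. The list contents, the prompt wording, and the temperature range are all assumptions made for illustration; the episode does not give the actual lists or settings used in the research.

```python
import random

# Illustrative lists only; the real ones were LLM-generated and far longer.
TECHNOLOGIES = ["Flask with SQLite", "Express with MongoDB", "Laravel with MySQL"]
REQUIREMENTS = ["use JWT authentication", "implement RBAC", "include a Dockerfile"]
THEMES = ["money management", "recipe sharing", "event booking"]


def build_job(rng: random.Random) -> dict:
    """Combine one technology, two requirements, and one theme into a prompt."""
    technology = rng.choice(TECHNOLOGIES)
    requirements = rng.sample(REQUIREMENTS, k=2)
    theme = rng.choice(THEMES)
    prompt = (
        f"Build a complete {theme} web application using {technology}. "
        f"It must {' and '.join(requirements)}."
    )
    # The temperature range is an assumption, not a value from the research.
    return {"prompt": prompt, "temperature": round(rng.uniform(0.7, 1.3), 2)}


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so the sample jobs are reproducible
    for _ in range(3):
        print(build_job(rng))
```

Each job pairs a randomly assembled prompt with its own temperature, which is the kind of variation Bogdan says is needed to push a model away from its default application.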

And OpenRouter is like a website which is very helpful because it has this API and you can run in parallel multiple generations. So I had threads, you know, and had like around 20 threads and with different LLMs and generating with different prompts randomly selected from this database of prompts. And then basically I was asking the LLMs to generate files which are structured in a way, and I just follow this way and I save the files which were generated. I say that this is the path of the file which you generate and this is the content of the file which you generate as XML tags and then you just take the stuffs and then you save them to file and you have the applications. But it took like two days or something.
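A rough sketch of the save step might look like the following. The tag names `<file>`, `<path>`, and `<content>` are hypothetical, since the episode only says that the path and content came back as XML tags; the path check is an added precaution, not something described in the research.

```python
import re
import tempfile
from pathlib import Path

# Hypothetical tag names; the actual output format is not given in the episode.
FILE_BLOCK = re.compile(
    r"<file>\s*<path>(.*?)</path>\s*<content>(.*?)</content>\s*</file>",
    re.DOTALL,
)


def parse_files(response: str) -> dict:
    """Return a mapping of relative path -> file content found in the response."""
    return {
        path.strip(): content.strip("\n")
        for path, content in FILE_BLOCK.findall(response)
    }


def save_files(files: dict, root: Path) -> list:
    """Write files under root, skipping any path that would escape it."""
    root = root.resolve()
    written = []
    for relative, content in files.items():
        target = (root / relative).resolve()
        if not target.is_relative_to(root):  # e.g. ../../etc/passwd (Python 3.9+)
            continue
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(content, encoding="utf-8")
        written.append(target)
    return written


if __name__ == "__main__":
    sample = "<file><path>app/main.py</path><content>\nprint('hello')\n</content></file>"
    with tempfile.TemporaryDirectory() as tmp:
        for path in save_files(parse_files(sample), Path(tmp)):
            print(path)
```

Rejecting paths that resolve outside the output directory matters here because the file names come straight from model output.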

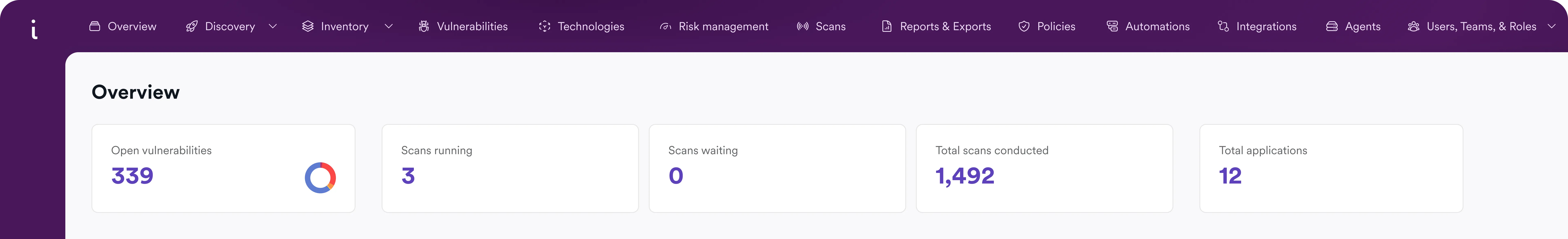

Dan: 20,000 applications. That’s very, very cool. So on that topic, Ryan, you talk a lot to our customers inside of the field. And as a security provider, we’re probably seeing a lot of people that have embraced LLMs. I know that’s changed the way that I build. And I imagine that for our customers, it’s pretty similar. But I can also see if 20,000 apps, that rate with which code can be produced now, it’s got to be making some people a little bit nervous. What are you hearing from customers of Invicti?

Ryan: Very, very nervous, right? Because a lot of times they’re creating these applications and security isn’t a part of them, right? So what we’re seeing is that some of these biggest organizations, they’re encouraging their developers to use all of these different LLMs to create applications for these use cases. I don’t think they’re creating them at the scale of 20,000 in a weekend, but they are creating them so fast. And what they want to do is make sure that in their pipelines, they’re still incorporating security. But there’s so many that are being created for so many different use cases. So it’s not just a specific language, a specific use case.

Security is trying to get in there and be incorporated to scan from SAST, from DAST, and all of those different types of scanners to make sure not only where we’re hosting that stuff is being protected, but the applications are secure. There’s not leaking information. They can’t obviously give us tons of token access. It’s really interesting and the fear is real that we’re seeing and because it’s just happening rapidly. Vibe coding, as we talked about it, you know, a few episodes ago. I heard about it, you know, a few months ago. But it has truly escalated to a point where most people are using it even if you’re not developers. You’re creating applications for things that can be useful in your day-to-day use case. So in that sense, applications are going to be live in your infrastructures and customer infrastructures and not a part of normal process.

Developers have a great process normally, going through your SDLC, adding security in there. And we’re really seeing that. But all these apps are now being created by other professionals that aren’t a part of that SDLC. So security folks, CISOs, want to make sure that security is staying at the forefront of these applications. So it’s really cool. But I have to say, one of the most interesting findings, Bogdan, is that each of the LLMs has its own personality when it comes to reusing those secrets, with “supersecretkey” appearing in over, what was it, 1100 apps? Can you walk us through why this happens? Is this essentially the AI remembering bad examples from its training data?

Bogdan: Yeah, I think it’s about training data. So each LLM laboratory has different training data and they have different examples, parts of the code. I think the LLMs learn from this code. They learn these, let’s say, bad examples and they just repeat this example. For example, I remember that GPT-5 has this “supersecretjwt” which is very, very common for GPT-5. But Claude Sonnet has this another one which is called “your-secret-key-change-it-in-production”, which is very different.

Dan: I’ve seen that one a few times.

Bogdan: Yeah, I mean, you see it all the time from Sonnet, but it’s different on the LLM because they were trained on different data. And based on this data, they learn some patterns that here I have to generate something and it looks like this usually and I just repeat that stuff. And I’m not even sure exactly how you can actually fix this problem because it’s not easy to generate random data. There are a lot of very interesting complications created to generate random data. So basically, I’m sure LLMs are not able to generate random data. So they have to generate something which is more random than predictable secrets.

I’ve seen that, for example, the newer LLMs, have some kind of stuff that sometimes they include the name of the application or like the type of the application in the secret. You know, they say something like “cooking-site-key-change-in-production” or something. So instead of just saying “secret-key-change-in-production”, so maybe something like that they can do. But other than that, I’m not sure exactly what the LLM providers can do because it’s not easy to generate random data, especially by LLMs.
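Since a model cannot be relied on to invent a random value, one common way around the problem is to keep the secret out of the generated code entirely. The sketch below is an assumption about how an application could do that, not something from the research: the environment variable name `JWT_SECRET` and the 32-character minimum are illustrative choices, and the deny-list contains only the placeholder values quoted in this episode.

```python
import os
import secrets

# Placeholder values quoted in the episode; a real deny-list would be much longer.
KNOWN_PLACEHOLDERS = {
    "supersecretkey",
    "supersecretjwt",
    "yoursecretkey",
    "your-secret-key-change-it-in-production",
}


def generate_secret() -> str:
    """Create a fresh 256-bit secret from the operating system's CSPRNG."""
    return secrets.token_urlsafe(32)


def load_secret(env=os.environ, name: str = "JWT_SECRET") -> str:
    """Read the signing secret from the environment and reject weak values."""
    value = env.get(name, "")
    if len(value) < 32 or value.lower() in KNOWN_PLACEHOLDERS:
        raise RuntimeError(f"{name} is missing, too short, or a known placeholder")
    return value


if __name__ == "__main__":
    # Run once at deployment time and store the value outside the repository.
    print(generate_secret())
```

Failing at startup when the secret is absent or recognizable means a forgotten placeholder is caught before the application ever signs a token with it.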

Ryan: Yeah, I mean, there was a huge range. As you mentioned previously, you used GPT-5, Claude, Gemini, DeepSeek, Qwen, and more to create these. So did you notice any patterns in how certain LLM families behave or even fail when generating the source code itself?

Bogdan: I didn’t find some kind of like common vulnerability which applies to a lot of them. I mean, this stuff is the most common one. It’s these hard-coded secrets which are used in a lot of places. They are used to generate tokens. They are used to generate like passwords, API keys, this kind of stuff. So this is the most common pattern which I saw. I didn’t find, for example, like some kind of code vulnerability or mistake which appears in a lot of them. I mean, each one has some kind of mistake from time to time, but it’s nothing like common which is repeatable between LLMs.

But this stuff is repeatable because it’s like a common, not easy to solve problem. They have to put some kind of secret there. They look at example code and the code has something like that there. So they were trained on this code and now they have also learned to put something like that there. They put something like “yoursecretkey” or something like that, which was in the training data which they found on GitHub or somewhere else on the internet. So this is the only pattern which I actually found. I found these hard-coded secrets and also predictable endpoints and predictable credentials. This is the common stuff between them.

Ryan: It’s definitely interesting because they’re all different, right? But they have that same issue that we’re seeing across all of them in their own way. So, you know.

Bogdan: I think it’s because the training data is shared. I think everybody’s training on the same internet wide collection of data and they see a lot of information which is repeated. I remember that, for example, there were some attacks on LLMs using tokens and the same attack worked on all the LLMs without any change. It was interesting, but it was explained because they use the same training data. I mean, it’s not exactly the same, but the same base. So each LLM has their own modifications and additions to make their model different, but the base is the same. It’s this huge collection of data from the internet.

Ryan: Definitely. So I want to walk through an exploit scenario with you. So a brand new app is deployed in production. The user can log in. RBAC looks good. Clear separation of user and admin controls. Let’s say a hacker pops open Chrome DevTools and sees a JWT token in the headers passed to the server. If the hacker suspects that the app was vibed up, what happens during the attack?

Bogdan: So if you are suspecting that an application is generated with an LLM, having a list of common secrets which are used by LLMs, you can try to do the following. So basically, first you take, for example, a JWT token, and you first decode it, and then you figure out what properties are there. But then you try to see if you can sign it, because if you can sign the JWT token, it means that you can also change it.

So the idea is that a JWT token is signed with a secret, which normally should be a secret key. But if that secret key is not secret because it’s predictable, anybody can sign that token. Usually, this JWT token has some kind of information which is related to what the user can do like for example, if you can read files or like it’s an administrator or something like that, you know. So if you know the secret key and you can sign the token back, you can change this information and then you can make yourself an administrator or you can make it to have access to other parts of the website which normally you don’t have.

So basically the idea is that you just have to take the token, decode it, and see what you can change in this token that will, let’s say, give you higher privileges. And then try different common secrets and see if you can really sign the token. If you can sign it, you can just replace it in, for example, local storage, where they are usually stored. And then you just refresh the application and you may receive administrative privileges or something like that based on what you can sign from the token.
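To make the steps concrete, here is a minimal, standard-library-only sketch of the check against an application you own. It assumes the token uses HS256 (HMAC-SHA256) and that the privilege lives in a claim named `role`; both are assumptions for illustration. The candidate secrets are the ones quoted in this episode.

```python
import base64
import hashlib
import hmac
import json
from typing import Optional

# Secrets quoted in the episode; a real word list would be far larger.
CANDIDATE_SECRETS = [
    "supersecretkey",
    "supersecretjwt",
    "your-secret-key-change-it-in-production",
]


def b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_hs256(payload: dict, secret: str) -> str:
    """Build a compact HS256 JWT for the given payload."""
    header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    body = b64url_encode(json.dumps(payload).encode())
    mac = hmac.new(secret.encode(), f"{header}.{body}".encode(), hashlib.sha256)
    return f"{header}.{body}.{b64url_encode(mac.digest())}"


def decode_payload(token: str) -> dict:
    """Decode the claims without verifying the signature."""
    return json.loads(b64url_decode(token.split(".")[1]))


def find_secret(token: str, candidates: list) -> Optional[str]:
    """Return the candidate that reproduces the token's signature, if any."""
    signing_input, _, signature = token.rpartition(".")
    for secret in candidates:
        mac = hmac.new(secret.encode(), signing_input.encode(), hashlib.sha256)
        if hmac.compare_digest(b64url_encode(mac.digest()), signature):
            return secret
    return None


if __name__ == "__main__":
    # Stand-in for a token issued by a test application with a predictable secret.
    token = sign_hs256({"sub": "alice", "role": "user"}, "supersecretkey")
    secret = find_secret(token, CANDIDATE_SECRETS)
    if secret is not None:
        claims = decode_payload(token)
        claims["role"] = "admin"  # hypothetical privilege claim
        print(secret, decode_payload(sign_hs256(claims, secret)))
```

If `find_secret` returns a value, anyone holding the same list can re-sign modified claims, which is exactly why a predictable signing secret defeats otherwise sound RBAC.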

Dan: Mm-hmm. Yeah, so like the knowing that secret lets you effectively issue new credentials. It’s like it’s like a teenager having access to a machine that prints driver’s licenses. All they have to do is just change that date of birth and instantly they get more admin privileges than they would normally. So that’s really cool.

So that’s how an attacker would manually exploit it. So Bogdan my question for you is: Invicti research has taken a look at this problem. And we’ve thought about how can we take that manual attack where you take the JWT, crack it, look at the bits, change your flags and be able to get access. How have we been able to automate that with both hard coded secrets? And you also mentioned in the article, other types of automated attacks that can be done against common registration authentication flows. So how can we apply that principle of the hacker at scale?

Bogdan: Yes, so basically we have a very large number of common secrets which are used to sign different tokens. JWT tokens is just one type of tokens, but there are many types of tokens which you can sign. And when we see these kind of tokens in the traffic, which for example, when we crawl or when we attack some application, we receive back different responses which may contain tokens. And when we see a token, we try to figure out if this token is using one of these tens of thousands of secrets.

And now we also have all these secrets from LLMs. So we have the most common. Actually, I took all the secrets from the LLMs which I found, and they are included in this database now. And now we are testing for all the secrets which LLMs usually use when they are signing tokens. That’s about secrets. So basically, if you have an application generated by an LLM and it generated a token, it’s probable that the secret used for the token is in this database, and then we can just check it, figure out that this is the correct key, and raise an alert.
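Before a token can be tested against such a database, it has to be spotted in the traffic. The sketch below shows one plausible way to pick JWT-shaped strings out of a response body and keep only those whose header declares an HMAC algorithm, since those are the ones signed with a shared secret. It is an illustration only, not a description of how the Invicti scanner is implemented.

```python
import base64
import json
import re

# A JWT header is JSON starting with {" which base64url-encodes to "eyJ".
JWT_PATTERN = re.compile(r"eyJ[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+\.[A-Za-z0-9_-]*")


def encode_segment(obj: dict) -> str:
    """Helper used only to build the sample token below."""
    return base64.urlsafe_b64encode(json.dumps(obj).encode()).rstrip(b"=").decode()


def header_of(token: str):
    """Decode the first segment of a candidate token, or return None."""
    segment = token.split(".")[0]
    try:
        header = json.loads(base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4)))
    except ValueError:  # covers bad base64, bad UTF-8, and bad JSON
        return None
    return header if isinstance(header, dict) else None


def find_hmac_tokens(body: str) -> list:
    """Return JWT-looking strings whose header declares an HS* algorithm."""
    hits = []
    for token in JWT_PATTERN.findall(body):
        header = header_of(token)
        if header and str(header.get("alg", "")).startswith("HS"):
            hits.append(token)
    return hits


if __name__ == "__main__":
    sample = f"{encode_segment({'alg': 'HS256', 'typ': 'JWT'})}.{encode_segment({'sub': '1'})}.c2ln"
    print(find_hmac_tokens(json.dumps({"token": sample})))
```

Tokens found this way would then be checked against the list of known secrets.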

And then of course there are these endpoints which are common. How you log into an application is unbelievably common between all the LLMs. For example, this api/login, api/register, and then the parameters which are used to log in or the parameters which are used to register. Then the credentials which are used are also very common between LLMs. It’s not a very big list. It’s like a small list, and you have something like, before it was admin/admin and now it’s like admin@example.com with password, something like that. This is the common new LLM-generated credential, which is very common between applications.

So basically, in Invicti, we will try to test all of these endpoints, which are common. We’ll try to register a new user. And then if we are able to register a new user, we try to see if there is a token in the response and we test this token also. Then we try to log in with predictable credentials, and if we can log in, again, we raise an alert. These are the main points which were implemented in the scanner.
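The predictable-credentials check can be pictured as a small list of login attempts built from the endpoint and credential pairs mentioned above. The sketch below only builds the request descriptions and sends nothing; the JSON field names `username`, `email`, and `password` are assumptions, and the actual scanner logic is not described in the episode. It is meant for checking an application you are authorized to test.

```python
import json
from dataclasses import dataclass
from itertools import product

# Values mentioned in the episode; real lists would be longer.
LOGIN_PATHS = ["/api/login"]
CREDENTIALS = [("admin", "admin"), ("admin@example.com", "password")]
# Assumed field names for the identity part of the login body.
IDENTITY_FIELDS = ["username", "email"]


@dataclass(frozen=True)
class Probe:
    method: str
    url: str
    body: str


def login_probes(base_url: str) -> list:
    """Build one POST description per path, credential pair, and field name."""
    probes = []
    for path, (identity, password), field in product(
        LOGIN_PATHS, CREDENTIALS, IDENTITY_FIELDS
    ):
        body = json.dumps({field: identity, "password": password})
        probes.append(Probe("POST", base_url.rstrip("/") + path, body))
    return probes


if __name__ == "__main__":
    for probe in login_probes("http://localhost:8000"):
        print(probe.method, probe.url, probe.body)
```

Because the lists are short, the full set of attempts stays small, which matches Bogdan's point that the common credentials are "not a very big list."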

Ryan: Yeah, very, very interesting. It’s like same, same, but different. They have so many similarities in the way they create these endpoints, the levels of code. But there’s those small, interesting differences that each one of them, each one of the LLMs has. But Dan, I want to zoom out a little bit.

Vibe coding started this year, I would say. 2025 really, really started to get its you know, name with everything happening. And we saw the explosion of applications being created and, as you mentioned earlier, just the sheer amount of people vibe coding. So where do you think we’re heading in the next 12 to 18 months of this? Do you think LLMs will learn to stop hardcoding these secrets or, you know, will we need a new security layer completely to really compensate that level of maturity.

Dan: Yeah, it’s an interesting question. It really has been a huge paradigm shift. It’s changed the way that I’ve built stuff. Bogdan, I know for you, it’s definitely changed the way that you build tools as well. And I think it’s here to stay without question. I do think that we are going to see changes in the way that the tools are leveraged.

One of the things that I’ve been into a lot recently is trying to make sure that you get better instructions when you’re generating some code. Been playing around with some spec-first paradigms whereby you kind of break up your coding tasks. Instead of giving like a one-shot that says “go off and make a whole app”, you’re instead kind of like taking all the guidance that you might traditionally have at a company, that is you know your internal documentation of we’re going to do this we’re going to do that, this is our policy for secret generation, and engineering it such that you get that stuff in the context window when you generate not the code, but you generate almost like the requirements for the code to be written.

And having that foundation of like almost like a constitution that governs how you build stuff, I think that we’re going to see more kind of formal adoption of those sort of processes that let you still leverage the vast ability to generate code at scale, but to put more guardrails on it from an organizational perspective that says, “Hey, look, we’ve thought about solving this problem in our org”. A bank is going to have very different requirements for code than your startup that’s trying to get to series A.

So I think that we’ll see a rise in changes in the way that we prompt the tools and changes in the way that those tools are leveraged to be able to produce outcomes that everybody can use. But I think it’s a huge deal. And, you know, very few of us... I used to hack assembly, right? When I was a kid and, you know, that’s not something I do nowadays. I think we’re going to see a very similar thing where we’re going to see a shift to LLMs as one of the primary ways to generate code.

But it is no excuse for not actually making sure that everything is secure because – Bogdan I think you were the one who said this originally – computer security, it’s a binary state. It’s a Boolean, it’s on or off. So even if you’re 99.9% secure, hey, you know, you still got a flaw. And if there’s one thing that my career has taught me thus far, it is that humans are incredibly creative and intelligent. They can find that tiniest of cracks in the wall and make their way through it.

So I think that from a security perspective, it’s going to become even more relevant. I think that as there are fewer eyes collectively, fewer humans taking a look at each line of code, that’s going to open up a lot of interesting vulnerabilities. So I think it’s going to be very interesting times. I’m excited. These tools are great. I use them all the time and I think they’re wonderful, but we’ve got to keep an eye out to make sure that we don’t end up producing an overall less secure software infrastructure.

But there’s a good note in the article. Bogdan did note that generally speaking, the quality of the code that is being emitted now, it’s much better than we saw before. I do believe that trusting in runtime security though is the only way to be sure, which is why being able to have tools that will actively probe, actively exploit and use the same sort of techniques that the bad guys would use on your app – I think it becomes more important than ever, but it’s without question an exciting time.

Ryan: Yeah, and maybe it’s ironic, but obviously our logo is the octopus, right? And octopuses are, they’re known for getting into those cracks and crevices and as small as you can get. I’ve seen those videos of them going through like a boat and –

Dan: Open a jar.

Ryan: Yeah, and they can weave their way into any small crevice. And that’s exactly what we want to do. We want to make sure that we can do that in those applications and weave our way into the smallest little crack of that 99.98%, we’re going to get in that 0.02 and find our way in to attack some of those LLMs. So I think, I think that makes sense for us as the octopus, as our logo, being able to do that.

Dan: Yep. Yeah. A little bit of fun internal lore. I remember once calling them the hacker of the sea during an internal branding conversation. So it’s a good, apropos logo.

Ryan: Yeah? That’s awesome. So I want to go a little bit more into SAST results. Bogdan, you mentioned that SAST results had false positives. What do you say about the ability of traditional static analysis tools and how they understand LLM-generated code, the structures and the patterns between them?

Bogdan: I did not find, like, actually, some good results from what I scanned. They were very rare. I mean, I think from thousands of alerts, there were only like maybe 20 which were like real. In the beginning I was checking all of them, but later I just checked like maybe like a few hundreds. But I mean, this is how it was working because, and there were also like a lot of duplicates, the same stuff repeated again and again and again. And I was just checking one, I didn’t check all.

But anyway, the idea is that most of them were just false positives because the SAST tools didn’t understand the code correctly or because the code was not exploitable in real life. So basically, I think they are just not trained, maybe, on these new types of code. Or maybe they are trained, but it’s just hard to figure out, is this bug exploitable in real production or is it just a potential vulnerability. Like it’s a potential vulnerability, but when you go into real life, like when you deploy this application, it’s not actually exploitable. I mean, I had very big problems finding something which was exploitable from all of these alerts. And I was, like, kind of disappointed.

Dan: It is pretty cool. I still think that’s kind of neat to be able to do that at scale. And just the idea of giving a bunch of prompts and going off and letting a whole bunch of LLMs run at full tilt for a while and produce something is, it’s a pretty cool feeling. Whenever I’m using Claude Code, there’s that same feeling of saying like, all right, let’s go and implement and just run. The very important thing though is that those eyes on that code is super key. Because I think the faster that you get the ability to generate stuff, there really is gonna be more of a temptation to ship things without oversight. And that I think is going to be the most, potentially the most interesting thing that we’ll see in the coming years.

Ryan: I thought it was funny. We had an internal app we were creating for this use case, vibed up an app for finding some metrics and things. And during the call, the developer changed it with a requirement that we asked. And we made a joke of, oh, that was minus six tokens, minus six tokens. So it’s going to be an interesting concept of how many tokens are we going to be using today? And I think that is going be an interesting concept in new age of developing and vibe coding. So it’s pretty cool.

Dan: All right, hey guys, this has been a really cool conversation. Thank you both for your time. Bogdan, it’s always awesome to have your voice on here. And I would highly encourage everybody to check out that blog post. It’s definitely generating a lot of interest in the community and it’s a really good use of time to read that. So this has been another episode of AppSec Serialized. Thank you to everybody behind the scenes who helped make this possible. Our guests, my co-hosts, and most of all, to you, the listener. Thanks and have a great day.

Credits

- Discussion: Dan Murphy & Ryan Bergquist

- Special guest: Bogdan Calin

- Production, music, editing: Zbigniew Banach

- Marketing support and promotion: Alexa Rogers
