S2E1: Revving the (Scan) Engine

At the heart of any DAST product is a scan engine that needs to be fast and accurate while keeping up with how the latest applications and APIs are being built and attacked. As AI-assisted development increases both the volume of code and its opacity, having an engine that can automatically and reliably test for security flaws without holding up releases is crucial for any serious DAST solution—and for its users. In this episode of AppSec Serialized, Dan Murphy and Ryan Bergquist look at the evolution of DAST and discuss how Invicti has combined the best features of Netsparker and Acunetix to create a new scan engine powering its AppSec platform.

Transcript

Dan Murphy: Hello and welcome to another episode of AppSec Serialized, the Invicti podcast about web security and those who practice it. I’m your host, Dan Murphy, chief architect and wearer of many hats here at Invicti Security. And I am pleased to announce that we have a new co-host here on the pod, Ryan Bergquist. Ryan, can you say hello and introduce yourself?

Ryan Bergquist: Absolutely. Thank you so much, Dan. My name is Ryan Bergquist. I am the Director of Field Engineering here at Invicti Security. I’ve been here for just over six years, and before that, I was in a role of a security engineer.

Dan: You’ve been in the trenches.

Ryan: Exactly, you got that right. So utilizing our tools, actually to be specific, the Acunetix tool, I was using that to test our internal and external web applications. I’ve been in this space for quite a while and very excited to share some of the knowledge that I have and learn some more about what else is in the industry and how we’re going about. So very excited to be here and very excited for the first episode of the second season.

Dan: Well, we’re very happy to have you. So on this podcast, we discuss the history, the challenges, and kind of the future of automated security testing, not just for applications, but also increasingly for APIs. And one thing that if you’re just coming back for season two, you may see something a little bit different: us. So we’re gonna be experimenting with a visual component of this pod. However, audio is still our number one, so we’re not going to have any fancy presentations or interactive displays of UIs or anything. We’re still targeting audio first, so you can put us on in the background and drive to work and learn a thing or two about security.

Ryan: The only thing I would say is if our faces scare you away, just turn them off and listen to the audio. We’re here for that as well.

Dan: No problem with that. So Ryan, it’s been a while since our last podcast and we’ve been pretty busy at Invicti, but let’s first start with some of our listeners might be new. And I’m curious if you could give us kind of a background on why the world needs DAST, why it’s so hard to build a good DAST and most importantly, and no pun intended, what we have been UP to recently here at Invicti.

Ryan: Yeah, so to start out. Why do we need DAST? The one thing that I say, and I get this from customers all the time, is why should we start with DAST? Why is DAST the first thing out there? And what I always say first and foremost is it’s what attackers are doing. Attackers are looking at your applications from the outside in. And that’s what a DAST scanner does. It looks at your applications from the outside in, testing a lot of different vulnerabilities from SQL injections, cross-site scripting attacks, all your zero-day vulnerabilities that you’re always worried about. What comes up? How are actors going to perform these payloads and attack methods? It’s truly interesting, and DAST gives you that approach where it’s not waiting for a pen test to go for a week at a time. These scans are running within minutes. And that helps you by just prioritizing what’s really needed.

And one other way that is really needed from a DAST perspective is compliance. You don’t really realize this, but DAST scanning is a part of most compliance frameworks out there. So in order to stay compliant with PCI, with SOC 2, you have to be running DAST scanning. So it’s a good market to be in when something has to happen. So customers out there with websites, they need to scan. They need to scan from a DAST perspective to find those vulnerabilities.

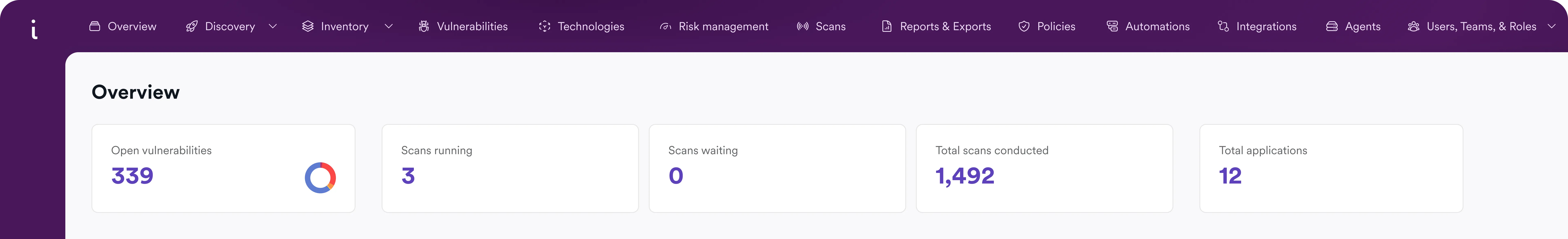

Now, what have we been up to? As you mentioned, UP. No pun intended. So for the viewers out there, as you all may know or may not know, Invicti as a whole bought and has had two companies underneath, Netsparker and Acunetix. So two very well-known DAST companies out there. And over the last three, four years, we’ve been really unifying the platform and unifying those two companies into one, being Invicti Security. So what we say is how we unified it in Unified Platform, that is UP, or the Invicti Platform as we have it now. So it’s currently out, we’ve released it into the wild and we have great webinars coming and everything from that, but within that, right, bringing those two companies into one.

What have we really done? We’re bringing the best engine, so the fastest engine on the market. I think we’re going to talk a little bit about that. We’ll probably get into that a little bit later.

Dan: It’s one of my favorite topics. Yep.

Ryan: And the other thing is a sense of an application, of what we’ve heard from our customers over the last five, six years and how applications are developing. What’s changing in the market that we also need to change for. And that’s all a part of this unified experience and having some level of application security posture management in it. So having all of your information in one place. A few other areas where it’s API security, creating BOLA or IDOR attacks, and things like that that people have been really searching for.

That’s what we’re bringing them with API security. Dynamic URL scanning. So when you’re in your pipeline builds, developers aren’t only spinning up one website at a time or just one website and testing on that, they’re spinning up multiple iterations daily. So your UUID scanning and things like that, all of that is being handled with our Invicti platform. So it’s truly unifying. All the feature sets that you’ve seen with Netsparker over the years, all the fast and amazing engine work that Acunetix has, and bringing it into one, which is the Invicti platform.

Dan: It’s the best it’s ever been.

Ryan: It really is. And we’ve been seeing an extreme growth in that. So that’s really what we’ve been up to.

And really curious, Dan, you’ve worked at a few different AppSec companies over the years, doing DAST, SAST, SCA… With all that knowledge and experience, what do you think has changed the most over time, over the last 10, 15 years, that we’ve been seeing and needing to make a change to?

Dan: Yeah, security has been wild, right? I, not to date myself, but I do remember reading like the original Phrack article that talked about SQL injection way back in the day. Text file, like, you know, very old school on a terminal. Hey, we still have SQL injection. Even 2025, it still happens. But I joke, but there has been a ton that has changed. So while some building blocks are still there, we’ve seen the web absolutely just explode in terms of like, the richness of applications.

Gone are the days when you had a form and that form, the sole kind of like place that you would talk to your back end was putting something into a form. Web apps are now full-fledged applications. In fact, I would go so far as to say that the web has become the primary means by which humanity in general is kind of interacting with machines, whether that be through single page applications, many of the applications we run even on our desktops today are just SPAs, single page applications. Increasingly, we’ve seen kind of a shift to that style.

What that has meant for DAST is that it’s become absolutely vital to leverage browsers, to do real world exploration, not just to kind of… It used to be enough to just look at web, look at like traffic and say, here’s an HTTP post. I’m gonna put in some bad parameters and see if it works out. But you know, those days are long since gone. So having kind of that richness of knowledge of, how a form works, how kind of an application works, all the different varieties and different sources into which injection can happen is absolutely important.

So browser usage is a big thing, SPAs are big, but the nature and the attack surface of applications have drastically increased. We’ve seen an explosion in terms of APIs where there are now tons of entry points that are simply hanging out there that are REST APIs, that are GraphQL APIs, that are just kind of like the unseen plumbing that makes everything on the internet work. And the adage out of sight, out of mind definitely holds true. APIs are increasingly becoming something that is kind of unsecured and sometimes a little bit scary. There’s a lot of breaches that have happened that are due to things being out there or just someone trying something. And I believe that because it lacks a UI, some of the certain human ingenuity that happens when you’re testing it doesn’t happen.

You referred to before about IDOR and BOLA which are two things that we’ve got that are coming inside of the new platform, which is checks that can make sure that you have broken object level authorization, meaning, hey, can I go and, if I learn, Ryan, of the UUID that is one of your assets, if I send that in my API request, can I get your stuff? And that’s actually shockingly much more common than it used to be. And it’s continuing to explode as have the number of CVEs that are out there. And it’s like this exponential curve that keeps getting bigger and bigger and bigger and bigger.
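As an illustration of the BOLA/IDOR question Dan describes ("if I send your UUID in my API request, can I get your stuff?"), here is a minimal sketch. Everything in it is hypothetical: the in-memory `ASSETS` store, the two handler functions, and the `is_bola` helper stand in for a real API and are not Invicti's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    asset_id: str
    owner: str
    data: str


# Hypothetical in-memory stand-in for an API's data store.
ASSETS = {
    "3f1c-ryan": Asset("3f1c-ryan", "ryan", "ryan's report"),
    "9a7e-dan": Asset("9a7e-dan", "dan", "dan's report"),
}


def get_asset_vulnerable(caller: str, asset_id: str):
    """Returns (status, body). Looks up the object but never checks who owns it."""
    asset = ASSETS.get(asset_id)
    if asset is None:
        return 404, None
    return 200, asset.data


def get_asset_fixed(caller: str, asset_id: str):
    """Same lookup, but enforces object-level authorization."""
    asset = ASSETS.get(asset_id)
    if asset is None or asset.owner != caller:
        return 404, None
    return 200, asset.data


def is_bola(handler, attacker: str, victim_asset_id: str) -> bool:
    """Replay the victim's object ID under the attacker's identity."""
    status, body = handler(attacker, victim_asset_id)
    return status == 200 and body is not None


vulnerable_result = is_bola(get_asset_vulnerable, "dan", "3f1c-ryan")
fixed_result = is_bola(get_asset_fixed, "dan", "3f1c-ryan")
print(vulnerable_result, fixed_result)
```

The check itself is just a replay of a known object ID under a second identity. A real scanner would do this over HTTP with two authenticated sessions.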

That has actually made it much more important to practice what we call runtime SCA, which is to say, let’s take a look at the software that composes like your actual web app, but let’s take a look at it from a unique perspective, that of the attacker. With traditional software composition analysis, you’re looking at the, you know, the package.json, you’re looking at the requirements.txt, you’re looking at like the thing that is the manifest of what you know is inside of it, but it’s not the same stuff that the bad guy can see. What the bad guy can see is what you expose at runtime, what you expose on the wire, what your app says about the way that you built it. That has become so much more important because the business of exploitation has become automated.

Much in the same way that we’ve kind of seen the rise of LLMs that have absolutely changed the way that we write code, the way that we build apps. I think that attackers are also leveraging these benefits as well. I think that we’re seeing an increase in just a sheer, like not individualized approach to attack. So if you’ve got a web app, and that web app has a certain icon because it’s built on top of some middleware and that middleware all suddenly has a CVE that’s out there. People will find it by virtue, not of like looking for you individually, but by virtue of increased technology that lets you see everything that is out there, that lets the attacker automate their attacks. It’s getting a little bit scary out there.

And in terms of LLMs, that really is something that has been a dramatic change. For myself, it’s something that I’ve been quite enamored of as a tech. I’ve been playing around with all sorts of ways that can hook up LLMs with MCPs, with almost tools that it can use to be able to do some cool stuff. And we’re starting to do some interesting work in this area with our new platform that I’ll happily jump into. We’ll probably do a whole episode on it because it’s pretty cool.

But the rise of LLMs has led very much to the rise of so much code that is out there. I’ve said this before, but it’s almost like you’ve supercharged the engine and we can now go super, super fast, but the rest of the system, the brakes, the tires, none of that stuff was upgraded. So now we can, as developers, put that pedal to the metal and just fishtail right out of the parking lot and hopefully not wrap ourselves around a tree. But the volume of code, the volume of apps, the volume of LLM-aided tech that is being rushed into prod has never been higher.

So the landscape is fundamentally different than it was. And it’s one of the things that I always tell people whenever I interview them for security jobs is, I love security because it’s a game that never gets old. There’s always going to be something new. There’s always going to be some amazing new breakthrough. I love to read through like crazy exploits to see just like how human ingenuity has truly no bounds. That is part of what keeps this job exciting is that there’s always new stuff to learn, always new attacks against which to defend and always something cool that we’re cooking.

Ryan: Yeah, and I think it’s helping others get smarter. It’s making them think smarter and it’s making it easier for bad actors to create attacks and become vigilant out there. So, and you have to be vigilant with all that level of risk.

Dan: Totally. Anecdotally, I’ve seen the rise of like spear phishing and like, you know, targeting emails so much better now. You know, we used to tell everybody like, you know, if you see a phishing attempt, it’s because it doesn’t look very good, but like it has never been cheaper to clone an absolutely perfect, pixel-perfect representation using the language, right? Of like, you know, training a model on, hey, send a corporate style communication, asking somebody to send it there. Attacks have gotten more sophisticated for sure. In fact, I think the attacker has benefited asymmetrically over the defender as stuff becomes cheaper.

But I get very excited about these parts that are underneath the hood. I get super excited and can talk about engines for a long time. But Ryan, y’know, you represent a different perspective. You’re from our field team and you are the voice of the customer. So my question for you is what are some aspects of the engine or a new platform that you find very exciting from a customer perspective?

Ryan: Absolutely. As long as DAST has been around, it’s just really about scanning. But one thing that has really stood out, and I think remains true today, it’s been throughout the history, but it’s speed. It’s how fast you can get through scanning an application. We in security do not want to hold up our development teams.

The rate that we are seeing pipelines being built and applications being built, posting builds daily, you need to have a fast scanner. You don’t want to hold people up because if you’re not running DAST as part of your pipelines, you may miss things or you may be halted from going to production. And we don’t want that to happen. So one thing that the engine, when it comes to performance, has needed to increase exponentially is the speed of the engine and the way we crawl. And something that the Invicti platform is doing really, really well, whether it’s using some AI crawling mechanisms to crawl deeper and more efficiently. I think with our deep scan, we’re crawling about 80% of the application within 20% of the time. So it’s really, really fast to go through all the endpoints, find every single parameter, and heuristically map that out so we can give that to the customer.

And the reality is, if we have the ability to crawl through fast, that’s exactly what an attacker can do too. Because the reality is, sometimes attackers use tools like this to help map out applications. Don’t do that. We don’t say do that. But yeah, that is something that people will do to help map out site maps and things like that. So when we can get through, scan through very, very fast, it’s truly amazing. So that’s from a performance standpoint, really something that has always stood out with the Invicti engine. And the Invicti platform is no different. Because the reality, as I said, development cannot slow down. And when you have that, you’re building up tension when you have slow scans and things like that. When you’re having fast scans, maybe even incremental scans, so you’re only looking at the delta, that’s really what customers are looking for.

The other really cool thing I would say that the engine has been doing is comprehensive coverage. So comprehensive coverage, not necessarily that it can just understand every website, be language agnostic. Because obviously DAST in general is language agnostic, which is huge.

Dan: That’s a big deal.

Ryan: It doesn’t matter what it’s written in, but you can scan it because we’re looking at anything over HTTP, HTTPS, and it’s really as simple as that. But what I’m referring to when it comes to comprehensive coverage is identifying the language and scanning the application as it should be scanned. So whether it’s a WordPress site, a single page application, they handle a scanner differently and that’s what our scanner in the back end is going to do. It’s going to help to optimize what and how we need to scan. So those features are really very, very necessary for the customers to see and how the engine is being developed.

And I would say the last one is accuracy. With accuracy… Speed is great. I understand you need to have a fast scanner. But if you have false positives everywhere, speed means nothing. If you’re scanning everything in one minute and you have a bunch of false positives, developers are going to be mad. You’re gonna hold a bunch of builds. And that’s not what we do.

So when you have that engine being able to actually verify, so not only crawl fast, not only find all the heuristic and zero-day vulnerabilities out there, but also be able to confirm them, it brings a different aspect to DAST scanning and really understands why DAST needs to be first, why DAST should be the priority in your AppSec journey, I would say.

Dan: And that actually all comes down to the same currency: speed and accuracy. Ultimately, what we’re talking about is saving time. Accuracy saves time and endless meetings discussing what’s real, what’s not real. If you’ve been doing this for a while, I’m sure that you’ve had that experience of sitting in a meeting and asking yourself, you know, are these real? We’re spending so much time discussing these vulnerabilities, but are these five critical vulnerabilities in my software composition analysis? Can they actually be exploited?

One of the reasons that I really am a true believer in DAST is that you can go to a developer and you say, look, this is worth your time. I want you to stop what you’re doing and I want you to fix this critical severity bug. What we have done is invested in making sure that we are trying to tell you that only, only when it really does matter. And we’re including those proofs as well.

We’ve actually extensively invested and built out the number of proofs that we’re getting from the other side when we actually exploit something. This is for example, if our application is doing some SQL injection, not only is it not enough for us just to say, yeah, look, there’s SQL injection here, but we’re going to say: And I want you to take this seriously because, developer, what we’re going to do is exfiltrate some information from the back end.

We actually have some pretty interesting things that are used to do that. So we will sometimes do the type of exfiltration that’ll get us one character at a time from the name of a table. And we’ll slowly do these kind of Boolean queries over time to piece together all of these bits, one character at a time, of some information that identifies the database on the back end. Now the purpose of doing that, you know, which is frankly a lot of requests, a lot of like complexity is that you can go to your developer and you can instantly say like, yeah, we got you. And this is the proof of why, because we want to minimize that amount of time. And we desperately want apps to be at that minimal time between find it and fix it. And then appreciate it. So that it doesn’t get exploited by somebody else first.
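To make the "one character at a time" idea concrete, here is a minimal sketch of Boolean-based blind extraction against a deliberately vulnerable lookup backed by an in-memory SQLite database. The table name, the `vulnerable_lookup` function, and the use of a binary search over character codes are assumptions made for illustration; the transcript does not say how Invicti's checks are built.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    # Hypothetical back end with one table whose name we will recover.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customer_orders (id INTEGER PRIMARY KEY, item TEXT)")
    conn.execute("INSERT INTO customer_orders (item) VALUES ('widget')")
    return conn


def vulnerable_lookup(conn: sqlite3.Connection, item_id: str) -> bool:
    """Deliberately vulnerable: concatenates user input into the query.

    Returns only True/False ("did the page show a result?"), which is
    all a Boolean-based blind attack needs.
    """
    query = "SELECT item FROM customer_orders WHERE id = " + item_id
    try:
        return conn.execute(query).fetchone() is not None
    except sqlite3.Error:
        return False


def extract_table_name(oracle, max_len: int = 64) -> str:
    """Recover the first table name one character at a time."""
    name = ""
    for pos in range(1, max_len + 1):
        lo, hi = 0, 127  # ASCII range; binary search on the character code
        while lo < hi:
            mid = (lo + hi) // 2
            condition = (
                "unicode(substr((SELECT name FROM sqlite_master "
                f"WHERE type='table' LIMIT 1), {pos}, 1)) > {mid}"
            )
            if oracle(f"1 AND {condition}"):
                lo = mid + 1
            else:
                hi = mid
        if lo == 0:  # past the end of the string: every comparison was false
            break
        name += chr(lo)
    return name


conn = make_db()
name = extract_table_name(lambda payload: vulnerable_lookup(conn, payload))
print(name)
```

Each character costs several true/false requests, which is the "lot of requests" Dan mentions. The payoff is a concrete piece of back-end data that can be shown to a developer as proof.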

That currency of getting it fixed fast is absolutely important and it truly is part of like the reason that DAST in my opinion is really kind of like the thing that you want to use as your clarifier on top of everything else. DAST will attack from the network and Ryan you alluded to this before, you said we’re network agnostic, right? We’re doing the same thing that somebody from the outside would do we’re using the same tools.

So when you’re actually testing with DAST, you’re doing something that anybody else on the internet who had access could do and that means when you find something that’s provable with DAST, you know that a bad actor out there, if they really wanted to, could do the same thing. Whether that is stealing a process table from something or forcing a DNS lookup to a remote host that is unique, that only we know about, or exfiltrating some data from a, you know, AWS private API to be able to say, hey, this is your IAM key. All of these things are unique and all of these things are going to tell you this is real. This needs to be fixed.

And that’s why I am a true believer in DAST and I think we’re at the, we’ve got the sharpest DAST that we have had. So I’m pretty excited about it.

Ryan: It’s really incredible. And one thing I always like to show on that same thread is a command injection. When we perform a command injection, it’s one thing to do it, see a string and a response, and you’re like, yeah, that’s most likely successful. It most likely is real. But again, like you said, we go one step further with our testing. And for that command injection, since we’re on the OS, we’re going to spin up a shell box and start writing some commands. Can we pull back the task list? Can we pull back the whoami? That information you shouldn’t be able to get that from the web app. But usually, if we are performing command injection and it’s successful, we’re going to find that information. And so can an attacker.

Dan: Yep. And this is something we’ll probably talk about this as we do subsequent episodes where we talk about some of our LLM testing technology. But one of the things that’s crazy is as apps are increasingly hooked up to LLMs, some of the more traditional mechanism for determining whether or not you have remote code execution, sometimes the LLM, if you are trying to kind of you know, social engineer it into executing some sort of command, say you want to cat your /etc/passwd, right? Show me the list of things. LLMs are actually capable now of like synthesizing that and giving you the fake output and saying like, well, this is what /etc/passwd would look like. I don’t know why you’re asking me for it, but there it is.

So we actually have extra mechanisms that we can use when we’re doing those sort of tests of things like LLMs to absolutely confirm that they really are executing it and not just lying to us and saying, well, here’s the output of what you would have got if you did execute it. To me, that actually goes back to that evolving puzzle that is never quite complete, but is absolutely exhilarating to keep up with. So I hope that we get to talk about that in the future and some other episodes.

Ryan: Awesome. So I talked a little bit about an engine and performance, why it matters to the customer. But obviously, you’re a little bit closer to that engine realm in understanding how we took information from Netsparker and Acunetix, unified them so we had the best of both worlds. What was the process like to get there?

Dan: Yeah, so… Kudos to all the engineers who worked on this stuff, because it was pretty impressive. We took a pretty comprehensive approach. We had two great engines, both of which were very, very impressive by themselves. But what we wanted to do is create that Venn diagram of we’ve got the really great bits over here, we’ve got the great bits over here, but what we were very interested in is finding the union of those two circles, so that we can actually have the best of both worlds.

So that meant a pretty comprehensive gap analysis whereby the team did a ton of work to characterize all the different findings and create kind of a roadmap of, well, we’ve got this in this engine and that in this engine, and how can we take the best of this and the best of this and combine them? So there’s a lot of analytic work that was done internally to be able to find out where there were deltas between the two.

I already mentioned that we did a lot of good work with kind of beefing up some of the proofs that we collect. So the engine that we had right now, we expanded that with some of the knowledge of one of the other engines that was really good on some of the proofs. So we improved a lot there. We already were building on kind of a good legacy of speed and excellence and performance. And what we wanted to do is extend the number of signatures that we had. So we augmented that engine with some additional signatures that weren’t there, always with that goal of making it like as fast as we possibly can.

That currency of time as the ultimate determiner of value is so key because whenever we add a check to the engine, we don’t just want to have more checks because you can add tons and tons of checks and be comprehensive. What we want to do is always come up with the most efficient way to do that. For example, we’ll commonly employ kind of like these polyglot payloads rather than sending 10 individual requests and response, all of which can add up. If you’re sitting at 250 millis of lag, that can kind of like quickly get to over a second, and that matters when you’re doing a million requests in a given scan. What we’ll instead do is send one big payload that has a variety of different responses that can tell us the answer to what would have been 10 separate questions.
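The arithmetic behind that point can be sketched in a few lines. The 250 ms lag and the ten-questions-in-one-payload figure come from the conversation; the function name and the assumption that requests run strictly one after another are illustrative only (a real scanner would send requests concurrently).

```python
def sequential_seconds(requests: int, rtt_ms: float) -> float:
    """Wall-clock time if requests are sent one after another."""
    return requests * rtt_ms / 1000.0


RTT_MS = 250  # lag per round trip, as quoted above

# Ten separate questions asked with ten separate requests...
separate = sequential_seconds(10, RTT_MS)
# ...versus one polyglot request whose response answers all ten.
polyglot = sequential_seconds(1, RTT_MS)

print(f"10 separate requests: {separate} s")
print(f"1 polyglot request:   {polyglot} s")
print(f"saved per insertion point: {separate - polyglot} s")
```

At 250 ms per round trip, ten requests already cost 2.5 seconds against 0.25 seconds for a single combined payload, and that difference repeats at every place in the application where the check is applied.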

So we’ve done a lot of tricks like that to make things fast, to keep things efficient and to make sure that we stay on track. But we’ve also tried to build up a firing range of test applications that we can use to constantly kind of evaluate how the individual both the starting point of the two engines that we had, and then also kind of the ending point of where we wanted to get to. We did an analysis internally where we took a bunch of open source applications that are out there, things like DVWA, Juice Shop, kind of like available open source things. We pitted them against it.

You know, we put the engines in the arena and we kind of had them duke it out. And every time that we would find something, we would say, you know, we’re not content with having the score of this unified engine be down here. So we constantly were improving and improving, and it’s kind of cool. I put together a little visualization when I was talking to the e-team and it showed kind of how we’ve improved over time. And it was this graph that showed, you know, where we were, how it’s going and how it’s been. And it was, it was nice because it was one of those classic exponential curves that you always see that everyone always makes fun of in sales projections, but it was nice because you saw that ramp and you saw that constantly building up and getting better and getting harder, better, faster, stronger, and ultimately producing something that was the best that we’ve got.

Ryan: I would say it’s so interesting with those open source, those vulnerable web apps that are out there. They’ve been out there for years and years and years. I remember in school doing cyber forensics and we would use those, whether it was WebGoat, DVWA, Juice Shop, we’d use those to practice on and have fun with. And I never thought I was going to be the one here to test our product on those. So it’s truly a full circle moment there.

Dan: Yeah. And actually, Ryan, what’s actually kind of key is that a lot of those test apps are not intended for machines to scan. A lot of them are built and intended for humans to scan. So that’s been part of our challenge is that we want to make sure that we’re able to find all those vulnerabilities while at the same time, you know, respecting the fact that those apps are, you know, in some cases kind of obfuscated.

We’ve actually done some interesting things whereby we can fingerprint some of the applications and maybe open a door that wasn’t previously there that’ll allow us to go in and actually do some of the testing. So we have kind of like a fingerprinting technology. It’s all kind of done in a way that’s transparent. We have, architecturally, our engine work such that we have kind of like every DAST engine is really kind of like two big parts. You’ve got the reconnaissance and you’ve got the attack. You’ve got the crawler, which will typically launch a browser that its job is to click, click, click all over a website that will generate all these requests. The requests will be HTTP requests, and then you find out what parts of those things you can put code into.

So then you have your attack piece, which is your other part, and that is effectively taking all of those network requests and doing stuff. We actually have a pretty interesting architectural distinction in the new engine whereby all of those security checks we’re running kind of like in a dynamically distributable extra kind of piece, which we call it the security checks, but it’s got a couple of different pieces to it. It’s almost like a set of virus signatures. It’s distinct from our core engine code, which is nice in that it allows us to have a clean API that lets us move quickly and release those independently, but also kind of like encapsulate things. We’re actually using a very similar setup to the architecture of an actual web browser itself, whereby you’ve got the core bits, implemented in native code, super fast, super tight, but all the extensions are done at a scripting layer.

And we ourselves have this scripting layer where all those checks happen. We’ve now kind of extended that to in addition to having all of these kind of logic checks, we’re also now having signatures that are coming down that are being pushed in that have these passive checks for things like CVEs. The intent there is that we don’t always need to do like an active check where we’re gonna send something out. Sometimes we could just do things based on signatures.
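The split Dan describes, a core engine plus separately shipped checks, with passive signatures alongside active logic, can be sketched roughly as follows. All class names, the signature format, and the `ExampleServer` banner are hypothetical and do not reflect Invicti's actual API. In the real engine the core is native code and the checks live in a scripting layer, whereas this sketch uses Python for both sides.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Response:
    url: str
    headers: Dict[str, str]
    body: str


@dataclass
class Finding:
    check: str
    url: str
    detail: str


@dataclass
class PassiveSignature:
    """Data-only check: matches traffic already observed, sends nothing."""
    name: str
    header: str
    pattern: str

    def match(self, resp: Response) -> Optional[Finding]:
        value = resp.headers.get(self.header, "")
        if re.search(self.pattern, value):
            return Finding(self.name, resp.url, f"{self.header}: {value}")
        return None


# An active check receives a `send` callable so it can issue extra requests.
ActiveCheck = Callable[[Response, Callable[[str], Response]], List[Finding]]


class Engine:
    """Stand-in for the core engine; checks are loaded separately from it."""

    def __init__(self) -> None:
        self.signatures: List[PassiveSignature] = []
        self.active_checks: List[ActiveCheck] = []

    def process(self, resp: Response, send: Callable[[str], Response]) -> List[Finding]:
        findings = []
        for sig in self.signatures:
            hit = sig.match(resp)
            if hit is not None:
                findings.append(hit)
        for check in self.active_checks:
            findings.extend(check(resp, send))
        return findings


sent_requests: List[str] = []


def fake_send(url: str) -> Response:
    # Records outgoing requests so we can see that passive checks send none.
    sent_requests.append(url)
    return Response(url, {}, "")


engine = Engine()
engine.signatures.append(
    PassiveSignature("EXAMPLE-0001 outdated server", "Server", r"ExampleServer/1\.[0-2]\b")
)
observed = Response("https://app.example/", {"Server": "ExampleServer/1.2"}, "<html></html>")
findings = engine.process(observed, fake_send)
print(findings, sent_requests)
```

Because the signature is plain data matched against a response the crawler already captured, it produces a finding without any extra traffic, which is the point of the passive checks mentioned above.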

So we’ve done a lot in extending that API and adding some new capabilities and some exciting new stuff whereby the security checks now have more power and more access to do cool stuff in the core engine than they have before. The fingerprinting tech that I talked about is just one example of that, but truly it’s in a much, much better place and we’re kind of excited for people to start using it.

Ryan: Yeah, it’s really amazing. All of that work, everything there, the amount of signatures going into it, new security checks, and we’re still eight times faster than we used to be. And just eight times faster than the market. No scanner is faster out there than doing this. And you have the level of checks that the team has created and continuing to enhance. So it’s truly an amazing accomplishment with this Invicti platform and everything that we’ve been up to here at Invicti.

Dan: Excellent. Well, I think that that is probably it for this episode. As I said, we’re going to have some time to do some deep dives in season two, where we’re going to get to go into the weeds on some of these interesting new features and talk about the story behind how they were built. Ryan, thank you very much for joining us. I’m very excited to be doing this new season with you and I look forward to several more of these in the future.

Ryan: Absolutely. I’m just happy to be here and look forward to bringing new content to everyone. So hopefully, we’ll have some fun guests on, industry-leading guests and things like that. So stay tuned. Sign up, and we’ll definitely have some more coming your way.

Dan: Excellent. And thank you to everybody internally and externally who helped make this all possible. And thanks most of all to you, our listener. Have a great day. Bye bye.

Credits

- Discussion: Dan Murphy & Ryan Bergquist

- Production, music, editing: Zbigniew Banach

- Marketing support and promotion: Alexa Rogers
