Sleeping on your application security? The bots are always wide awake

Every day, the world’s websites and web applications are probed and attacked by millions of requests from malicious bots. Resisting these continuous threats needs more than layered protection – it also requires continuous application security testing to harden the application itself.

Cybercriminals, bad actors, malicious hackers – whatever you call them, we tend to think about “the bad guys” as actual humans who roam the web looking for prey. This leads to the misconception that your systems, sites, and applications are basically safe until somebody takes an interest and comes to attack them. In reality, web-facing systems are being probed and attacked every minute of every day by a tireless army of bots looking for weaknesses to exploit and report back to their evil overlords, and security must keep up or risk being overrun.

Crawling with malicious intent

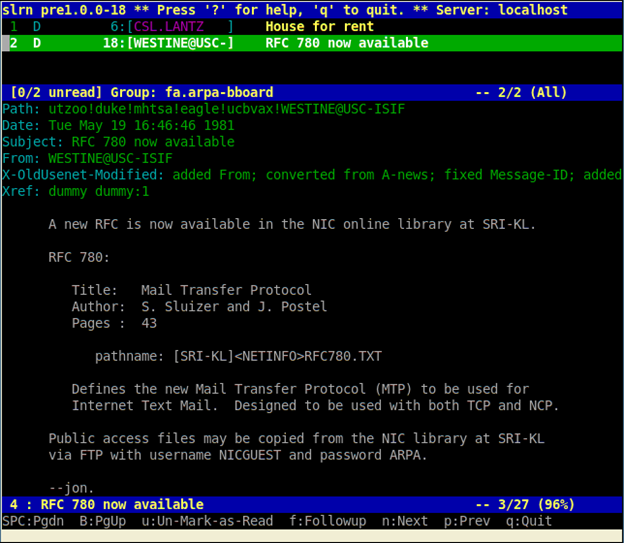

Whether we like it or not, traffic initiated by humans makes up less than 60% of all web activity, with bots making up the remainder, and malicious bots alone account for over 27% of all traffic (based on statistics for 2021). Let that sink in: over a quarter of all web traffic is malicious bots. Many of these are actively probing for vulnerabilities by indiscriminately sending malicious requests and seeing what happens. Just as with spam or phishing, millions of these attempts will fail, but every now and again, one will succeed, phone home, and open the way for attackers.

The Akamai Web Application and API Threat Report for 2022 shows just how intense automated probing of web applications has become. Of all the web attack attempts identified by Akamai, over 50% are attempts to exploit directory traversal and related vulnerabilities to access common files, most notably /etc/passwd. This system file exists on all Linux/Unix servers, and while it does not actually contain passwords, being able to read it through a web request shows that the host has a file access flaw and might be vulnerable to other attacks. So if you see an isolated web request for a very specific file path, that's likely a bot looking for vulnerable systems, and with over a billion (!) such requests logged every day by Akamai alone, the web is positively crawling with them.
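To make the pattern more concrete, here is a minimal Python sketch of how such probes might be spotted in web server access logs. It assumes logs in the common/combined log format, and the signature list and the function names `is_traversal_probe` and `probes_by_ip` are hypothetical illustrations, not Akamai's or any other vendor's detection rules. Real WAF rule sets cover far more variants and encodings.

```python
import re
from collections import Counter
from urllib.parse import unquote

# Hypothetical, illustrative signatures only; production rule sets cover far more variants.
TRAVERSAL_PATTERNS = [
    re.compile(r"\.\./"),        # Unix-style parent-directory segment
    re.compile(r"\.\.\\"),       # Windows-style parent-directory segment
    re.compile(r"/etc/passwd"),  # the classic Unix target file
]

# Matches the start of a common/combined log format line: client IP, then the request line.
LOG_LINE = re.compile(r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>[A-Z]+) (?P<path>\S+)')


def is_traversal_probe(request_path: str, max_decodes: int = 3) -> bool:
    """Return True if the request path looks like a directory traversal probe."""
    decoded = request_path
    # Decode repeatedly so double-encoded payloads such as %252e%252e%252f are caught too.
    for _ in range(max_decodes):
        next_decoded = unquote(decoded)
        if next_decoded == decoded:
            break
        decoded = next_decoded
    return any(pattern.search(decoded) for pattern in TRAVERSAL_PATTERNS)


def probes_by_ip(log_lines):
    """Count suspected traversal probes per client IP in an iterable of log lines."""
    counts = Counter()
    for line in log_lines:
        match = LOG_LINE.match(line)
        if match and is_traversal_probe(match.group("path")):
            counts[match.group("ip")] += 1
    return counts


if __name__ == "__main__":
    # Made-up sample lines using documentation IP ranges.
    sample_log = [
        '203.0.113.7 - - [10/Oct/2022:13:55:36 +0000] "GET /download?file=../../../../etc/passwd HTTP/1.1" 404 153',
        '198.51.100.23 - - [10/Oct/2022:13:55:41 +0000] "GET /products/42?sort=price HTTP/1.1" 200 5120',
        '203.0.113.7 - - [10/Oct/2022:13:56:02 +0000] "GET /static/%252e%252e%252fetc%252fpasswd HTTP/1.1" 404 153',
    ]
    print(probes_by_ip(sample_log))  # Counter({'203.0.113.7': 2})
```

Because bots often URL-encode (or double-encode) the `../` sequences to slip past naive filters, the sketch decodes the path a few times before matching rather than searching the raw log text.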

Vulnerable applications ripe for the picking

The data from Akamai also shows bot probes growing in intensity compared to 2021. In practice, this means that every live website and application is likely to be bombarded with automated malicious payloads every single day, from file access probes through attempts to connect to an existing web shell to more specific exploits targeting SQL injection and other common vulnerabilities. It’s clear that cybercriminals are rattling the virtual doorknobs on a massive scale, looking for a way in, and yet organizations routinely release web applications with known vulnerabilities and hope for the best.

To put a specific number on it, the recent Invicti AppSec Indicator report revealed that 74% of organizations release vulnerable applications often or always. The report goes deeper into the possible reasons, including the overriding pressure to release on time and the inefficiencies of security-related tools and workflows. The bigger issue, though, is that 45% of respondents said vulnerabilities make it into production quite simply because addressing them isn’t a priority. Most likely, these companies rely on their web application firewalls (WAFs) and other layers of protection, hoping that even if existing vulnerabilities are targeted, these outer shields will stop the attacks.

Closing all gaps at every level – starting with the application

Web application architectures and deployment models grow ever more complex, and the race to keep up both with them and with the threat environment has sprouted a vast array of security measures (and acronyms). Ideally, every layer of deployment should have its own protection, including WAFs in front of the application, cloud workload protection platforms (CWPP) monitoring that application's cloud footprint, and load balancers and traffic filters protecting against denial of service (DoS). But if you focus only on assembling that protection puzzle, it is easy to lose sight of the application that sits under all these blankets.

The perimeter defense mindset originates in traditional network security, where you would effectively build a wall around a tightly controlled internal network and all the applications in it. In the API-driven web era, there is simply no way to guarantee that you have identified and locked down all possible ways to access your web assets. So while you need (and should get) all the protection you can, application security must start with the application itself. Attack detection and blocking can go a long way, but with millions of malicious requests crossing the web and new exploits attempted every day, something will eventually get through the filters, and when it does, unaddressed vulnerabilities in the application could prove fatal.
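As one illustration of fixing the problem in the application itself, here is a minimal Python sketch of an application-side guard against the directory traversal probes described earlier, which works regardless of what a WAF in front of it catches. It assumes Python 3.9+ (for `Path.is_relative_to`); the function name `resolve_user_file` and the `/srv/app/files` directory are hypothetical, and this is one common pattern rather than a complete defense.

```python
from pathlib import Path

# Hypothetical directory the application is meant to serve files from.
BASE_DIR = Path("/srv/app/files")


def resolve_user_file(base_dir: Path, requested: str) -> Path:
    """Resolve a user-supplied file name inside base_dir.

    Raises PermissionError if the resolved path would land outside base_dir,
    which is exactly what payloads like ../../../../etc/passwd try to achieve.
    """
    base = base_dir.resolve()
    # resolve() collapses ".." segments and follows symlinks; an absolute
    # `requested` value replaces `base` entirely when joined, so it is caught too.
    candidate = (base / requested).resolve()
    if not candidate.is_relative_to(base):  # Path.is_relative_to requires Python 3.9+
        raise PermissionError(f"path escapes base directory: {requested!r}")
    return candidate


if __name__ == "__main__":
    print(resolve_user_file(BASE_DIR, "reports/q3.pdf"))
    try:
        resolve_user_file(BASE_DIR, "../../../../etc/passwd")
    except PermissionError as exc:
        print("blocked:", exc)
```

Checking the fully resolved path, rather than searching the input for `../`, means encoded or otherwise disguised payloads are judged by where they actually lead once decoded by the web framework.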

Test your applications before someone else does

The AppSec Indicator data shows companies struggling to build fully secure applications without compromising their development cycles. Respondents name many reasons, from skill gaps to inadequate tools and unreliable data, all pointing to persistent problems with finding and remediating security issues efficiently. However, if security is treated as an inherent part of software quality, then (given the right tools) security testing can become a routine part of the development pipeline, just like any other type of testing. Once exploitable security defects land in issue trackers and get addressed like any other bug, releasing applications with no known vulnerabilities becomes a very real possibility. The latest dynamic application security testing (DAST) solutions already offer these capabilities, with Invicti even providing automated vulnerability confirmation through Proof-Based Scanning.

Online threats constantly evolve and never desist, so even if you have no known vulnerabilities today, you could be vulnerable tomorrow. Again, the answer is testing, testing, and yet more testing, this time on your production applications. In this case, modern DAST is the only realistic approach to automatically testing entire application environments at scale. And just as the bots keep probing day in and day out, so a good DAST solution can scan your applications on a regular schedule, always using the latest checks known to the security community.

Because if you don’t test your applications, the bad guys will.